Update: the altperl scripts seem to remove the need for most of the steps here. Take a look at the post Slony1-2.0.0 + PostgreSQL 8.4devel for an example of how to use them.

When I first installed Slony-I to replicate between two PostgreSQL servers, it was very confusing. Now I am somewhat less confused (proven by the fact that the replication is actually working :-)) and would like to share some things I've learned along the way.

The first thing to understand is how Slony-I works at a high level:

- When you install Slony in a database, it creates some tables in a separate schema.

- It also adds triggers to your tables: (a) on the master, triggers which log the changes each time the table is modified, and (b) on the slave, triggers which disallow changing the data (this is logical, but can be surprising).

- When data gets modified on the master, these triggers log the changes to the slony schema.

- Each slave needs to run a daemon (slon) which fetches these changes from the slony schema on the master, writes them locally and then deletes them (to keep the slony schema from growing indefinitely).
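To see these pieces for yourself once Slony is installed, something like the sketch below can help. It assumes the cluster is called slon_cluster_a (the name used later in this post), that the Slony schema is named after the cluster with a leading underscore, and that psql can reach the database. The two function names are made up for illustration and are not part of Slony.

```shell
#!/bin/sh
# Sketch only: the helper names are hypothetical, not part of Slony.

# Slony keeps its bookkeeping tables in a schema named "_<cluster name>".
slony_schema_name() {
    printf '_%s\n' "$1"
}

# List the tables Slony created in its own schema.
# $1 = cluster name, $2 = database name
list_slony_tables() {
    schema=$(slony_schema_name "$1")
    psql -A -t -d "$2" -c \
        "SELECT tablename FROM pg_tables WHERE schemaname = '$schema' ORDER BY tablename;"
}
```

Calling `list_slony_tables slon_cluster_a dbname` on either node should print the tables Slony added, if the assumptions above hold for your installation.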

A limitation of the Slony system is that you can't change the list of tables or their structure dynamically. To do this you must break the synchronization, perform the changes and let Slony copy all the data over. This sounds really bad, but Slony is quite fast actually, copying over a ~30GB dataset in ~4 hours (on a local 100MB network).

Now with these out of the way, how do you actually start a replication?

The first thing you need to make sure of is that both servers can connect to each other. You must be able to go to both servers and successfully execute the following command:

psql -h [the other server] -U [user] -d [DB name]
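If you want a yes/no answer rather than an interactive session, the check can be wrapped in a small function. This is only a sketch: check_conn is a made-up name, and it assumes that psql is on the PATH and that authentication (for example via ~/.pgpass) is already sorted out.

```shell
#!/bin/sh
# Sketch: verify that a PostgreSQL server accepts connections before involving Slony.
# $1 = host, $2 = user, $3 = database
check_conn() {
    if psql -h "$1" -U "$2" -d "$3" -c 'SELECT 1;' >/dev/null 2>&1; then
        echo "OK: $1 accepts connections for $2 on $3"
    else
        echo "FAILED: cannot connect to $1 as $2 on $3" >&2
        return 1
    fi
}
```

Run `check_conn second_host u dbname` on the first server and `check_conn first_host u dbname` on the second one; both should report OK before you continue.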

Now that you have made sure that you have connectivity, you must generate an initialization file and feed it to slonik (the slony management tool). You can do this fairly easily if you have a regular structure for your tables. The query below generates such a file:

SELECT '#!/bin/bash

slonik <<_EOF_

cluster name = slon_cluster_a;

node 1 admin conninfo = ''dbname=dbname host=first_host user=u password=p'';

node 2 admin conninfo = ''dbname=dbname host=second_host user=u password=p'';

init cluster (id = 1, comment = ''master a'');

create set (id = 1, origin = 1, comment = ''fdb'');

' || array_to_string(ARRAY(SELECT

CASE

WHEN c.relkind = 'S' THEN

'set add sequence (set id = 1, origin = 1, id = ' || (SELECT SUM(1) FROM pg_class WHERE pg_class.relname <= c.relname) ||

', fully qualified name = ''' || n.nspname || '.' || c.relname || ''', comment = ''Sequence ' || c.relname || ''');'

WHEN c.relkind = 'r' THEN

'set add table (set id = 1, origin = 1, id = ' || (SELECT SUM(1) FROM pg_class WHERE pg_class.relname <= c.relname) ||

', fully qualified name = ''' || n.nspname || '.' || c.relname || ''', comment = ''Table ' || c.relname || ''');'

END

FROM pg_class c

LEFT JOIN pg_namespace n ON n.oid = c.relnamespace

LEFT JOIN pg_tablespace t ON t.oid = c.reltablespace

WHERE

(c.relkind = 'r' OR c.relkind = 'S')

AND n.nspname = 'public'

AND NOT(c.relname LIKE '%_old')

AND NOT(c.relname = 'foo')

ORDER BY c.relname ASC), E'\n') ||

'store node (id = 2, comment = ''slave a'');

store path (server = 1, client = 2, conninfo = ''dbname=dbname host=first_host user=u password=p'');

store path (server = 2, client = 1, conninfo = ''dbname=dbname host=second_host user=u password=p'');

store listen (origin = 1, provider = 1, receiver = 2);

store listen (origin = 2, provider = 2, receiver = 1);

subscribe set ( id = 1, provider = 1, receiver = 2, forward = no);

_EOF_
';

Now I know that this looks like a monster query, but it is actually quite simple. It creates a bash script (I'm assuming that the DB servers are running Linux) composed of three parts:

- The initial part (make sure that the cluster name and the connection strings are correct here)

- The middle part which differs depending on the type of object (table or sequence - sequences need to be synchronized too because they (1) are outside of transactions and (2) can be changed explicitly)

- The final part. Again, make sure that the connection strings are correct.

Regarding the middle part: most probably you will want to fiddle with the filtering criteria. For example the above query includes tables / sequences which:

- Are in the public schema (this is important because we have the schema created by slony)

- Don't end in _old

- Are not named foo
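Before generating the slonik script it can be useful to preview which objects the filter picks up. The sketch below rebuilds the same WHERE clause with a variable list of excluded names. Both function names are hypothetical, and the names passed in are assumed to be plain identifiers without quotes in them.

```shell
#!/bin/sh
# Sketch: print one name-based exclusion per argument, in the same form
# as the "AND NOT(c.relname = 'foo')" line of the generator query.
build_exclusions() {
    for name in "$@"; do
        printf "AND NOT(c.relname = '%s')\n" "$name"
    done
}

# List the tables (r) and sequences (S) that would be replicated.
# $1 = database name, remaining arguments = names to exclude
preview_objects() {
    db=$1
    shift
    psql -A -t -d "$db" -c "SELECT c.relkind, n.nspname || '.' || c.relname
FROM pg_class c JOIN pg_namespace n ON n.oid = c.relnamespace
WHERE c.relkind IN ('r', 'S') AND n.nspname = 'public'
AND NOT(c.relname LIKE '%_old')
$(build_exclusions "$@")
ORDER BY c.relname;"
}
```

For example, `preview_objects dbname foo` should list the same objects that the generator query above turns into set add table / set add sequence lines.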

Save the output of the query in a file on one of the servers (either the master or the slave), give it executable permissions (chmod +x slony_setup.sh) and run it (./slony_setup.sh). You only have to run it once (i.e. either on the master or on the slave) because it will set up the triggers / tables / etc. on both machines (if the connection strings are correct - if they are not you will have other troubles as well, so go and correct them :-)).
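The save / chmod / run steps can be scripted as well. The sketch below assumes that the generator query was saved in a file called gen_slony_setup.sql (a name I made up) and that psql connects without prompting; generate_setup_script is likewise just an illustrative name.

```shell
#!/bin/sh
# Sketch: turn the generator query into an executable setup script.
# $1 = database, $2 = SQL file holding the generator query, $3 = script to write
generate_setup_script() {
    # -A: unaligned output, -t: rows only (no header or footer), -o: write to file
    psql -A -t -d "$1" -f "$2" -o "$3" || return 1
    # Refuse to continue if psql produced an empty file.
    [ -s "$3" ] || { echo "generated script $3 is empty" >&2; return 1; }
    chmod +x "$3"
}
```

Usage would then be `generate_setup_script dbname gen_slony_setup.sql slony_setup.sh && ./slony_setup.sh`. It is worth reading the generated file before running it, since it contains the connection strings and the full list of replicated objects.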

A remark about choosing the (PostgreSQL) user under which to run Slony: for simplicity it is recommended to use postgres or another superuser, because it has to make modifications to the DB (add schemas, create triggers, etc). From what I understand it is possible to use a locked down user (i.e. one which has minimal privileges), but it is a painful procedure.

Now go to the slave, log in as user "u" (the one defined in the slony setup script) - you can do this indirectly for example by doing sudo su u if you have sudo rights - and start the slon daemon: slon slon_cluster_a "dbname=dbname user=u" (the quotes are needed because the connection string must be passed as a single argument). You should see a lot of messages go by. If there aren't any obvious errors, the initial data copying has begun. You can see the status of Slony by executing the following query on the slave (of course you should replace _slon_cluster_a with the correct schema name):

SELECT * FROM _slon_cluster_a.sl_status

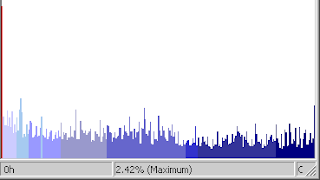

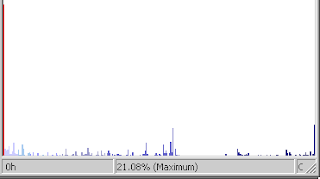

What you are interested in is the st_lag_num_events column. Initially this will increase, until the copying of the data is done and the slave has had a chance to catch up with the master. After that it should hover around 0. Sometimes you will see spikes (I assume that these appear during autovacuum), but it should always tend towards 0.
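Instead of re-running the query by hand, you can poll the lag from a script. The sketch below relies only on the sl_status view and the st_lag_num_events column mentioned above; the function names, the threshold and the polling interval are my own choices, and it assumes psql connects without prompting.

```shell
#!/bin/sh
# Sketch: read the replication lag from the sl_status view.
# $1 = cluster name, $2 = database
lag_events() {
    psql -A -t -d "$2" -c "SELECT max(st_lag_num_events) FROM _$1.sl_status;"
}

# Poll until the lag drops to a threshold.
# $1 = cluster name, $2 = database, $3 = max acceptable lag, $4 = seconds between polls
wait_for_catch_up() {
    while :; do
        lag=$(lag_events "$1" "$2") || return 1
        # Stop if the query returned nothing or something that is not a number.
        case "$lag" in
            ''|*[!0-9]*) echo "unexpected lag value: '$lag'" >&2; return 1 ;;
        esac
        [ "$lag" -le "$3" ] && return 0
        echo "lag: $lag events"
        sleep "$4"
    done
}
```

For example `wait_for_catch_up slon_cluster_a dbname 5 10` checks every 10 seconds and returns once the lag is at most 5 events. An empty result (no rows in sl_status) is treated as an error rather than as "caught up".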

Now that you've set up the replication, how do you tear it down (for example because you want to change the tables)? The easiest way I found was using pgAdmin.

First stop the slon process on the slave.

Now in pgAdmin go to your database, and you should see a "Replication" entry, similar to the one shown below:

You should delete the entry on both the master and the slave (this is one of the rare cases when working with slony when you need to operate independently on the two machines - of course probably there is some command-line script which does this for you).
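For the command-line route, slonik has an uninstall node command which removes the Slony schema and triggers from a node. I have not used it for the procedure described here, so treat the following as a sketch under that assumption. It only prints a slonik script, teardown_script is a made-up name, and the node ids match the setup script above.

```shell
#!/bin/sh
# Sketch: print a slonik script that removes Slony from both nodes.
# Assumption: slonik's "uninstall node" is used instead of pgAdmin.
# $1 = cluster name, $2 = conninfo of node 1, $3 = conninfo of node 2
teardown_script() {
    cat <<EOF
cluster name = $1;
node 1 admin conninfo = '$2';
node 2 admin conninfo = '$3';
uninstall node (id = 2);
uninstall node (id = 1);
EOF
}
```

With the slon daemon stopped, the output can be piped into slonik, for example `teardown_script slon_cluster_a 'dbname=dbname host=first_host user=u password=p' 'dbname=dbname host=second_host user=u password=p' | slonik`.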

Now do the changes on the master and export the exact database structure to the slave. Again, for me the easiest method was to drop and recreate the database on the slave and after that export the schema from the master to the slave like this:

pg_dump -h [master] -s dbname|psql dbname

The -s tells pg_dump to only dump the schema, not the data.
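The drop / recreate / import sequence can be put together roughly as follows. This is a sketch to be run on the slave; copy_schema_from_master is a made-up name, and it assumes that dropdb, createdb, pg_dump and psql are on the PATH and connect without prompting. The dump is written to a temporary file first so that a failed pg_dump does not leave you with an empty database.

```shell
#!/bin/sh
# Sketch: recreate the database on the slave and load the master's schema into it.
# Run on the slave. $1 = master host, $2 = database name
copy_schema_from_master() {
    dump=$(mktemp) || return 1
    # Dump first: if pg_dump fails, the slave database is left untouched.
    if pg_dump -h "$1" -s "$2" > "$dump" && dropdb "$2" && createdb "$2"; then
        psql -d "$2" -f "$dump"
        rc=$?
    else
        rc=1
    fi
    rm -f "$dump"
    return "$rc"
}
```

Usage: `copy_schema_from_master first_host dbname`.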

Now regenerate and re-run the slonik script and start the slon daemon.

That's all folks. Hope it helps somebody who is struggling to understand slony, like I was.

PS. An interesting side-effect of installing slony on the slave is that other triggers get dropped (and need to be recreated). This can be important in some scenarios, for example: let's say that you have a table A and a table A_log which contains the operations done on table A. A_log is updated by a trigger on A. Now if you use Slony, you have two options:

- Let Slony synchronize both tables. This is the easiest, however it can happen that for short periods of time you will have inconsistencies between A and A_log on the slave if you are using the "READ COMMITTED" isolation level (which is recommended in most cases) because Slony might have synchronized one of the tables, but not the other. If this is acceptable for you, great.

- If this is not acceptable, you have to do two things: first, exclude the table A_log from the synchronization (by adding it to the exclusion criteria in that huge select at the beginning of the article). Second, after you have executed the slonik script, you need to add the trigger back on the slave for the "A" table.

PS PS. What I presented here is a very rudimentary configuration and can be substantially improved. For example Slony has support for the following scenario: the master goes away and now the slave should become the master and when later the master comes back (is repaired for example), it can become the new slave ("switch"). From what I understand some changes need to be done to the above configuration to support this (fairly important scenario).
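For the planned variant of this scenario (both machines are up and you simply want to swap roles), slonik provides the lock set and move set commands. I have not tested this against the configuration from this post, so the following is only a sketch of what such a script might look like. switchover_script is a made-up name, the node ids are the ones from the setup script above, and the other changes needed to support this are not covered. The case where the master disappears unexpectedly is handled by a separate slonik command (failover), which is not shown here.

```shell
#!/bin/sh
# Sketch: print a slonik script for a planned switchover of one set.
# $1 = cluster name, $2 = conninfo of the current master (node 1),
# $3 = conninfo of the current slave (node 2), $4 = set id
switchover_script() {
    cat <<EOF
cluster name = $1;
node 1 admin conninfo = '$2';
node 2 admin conninfo = '$3';
lock set (id = $4, origin = 1);
move set (id = $4, old origin = 1, new origin = 2);
EOF
}
```

As with the setup script, the output would be piped into slonik.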

Update: Thanks to one of the commenters I took a closer look at EnterpriseDB and on their site found a link to the following documentation which describes the structure and intention of Slony: Slony-I - A replication system for PostgreSQL - Concept. It is a short read (~17 pages) and very useful for understanding Slony.

Update: Here is another tutorial for Slony. This is pretty much the same as the samples on the Slony website, however I found it to be easier to understand (this is also possibly because in the meantime I've learned more about Slony, but give it a try). It also gives examples on how to use some helper scripts included in Slony to make your life easier.

Updates:

Update: it seems that slony requires the existence of the public schema, even if it isn't installed in it.

Update: Setting up Slony cluster with PerlTools - a much simpler method than the one described by me :-)