This is again one of those topics I like to rant about, so I'll give you the short version: when you see a number, question it! Most of the numbers thrown at us in the media can be disproven quite easily, and it is our responsibility as people not to just repeat whatever we’ve heard, but rather to stop and think about it a little. (Of course I’m not immune to this myself: I fell into this very trap when reading “Contemplating Financial Trading At Picosecond Resolution” on Slashdot, only to see the very insightful comment that light travels only about 0.3 mm in a picosecond, and yes, I’ve done the math, so this article is pure BS.)

Offtopic: why do sayings in different languages have so much in common? For example, in English we have the expression “beating a dead horse”, and in Hungarian we would say that somebody is talking about his “horse made of branch” (vesszoparipa). Ain’t it interesting?

Getting back to my rant :-). I’ve seen an article recently about a local (Romanian) affiliate program: eMAG Profitshare 2010. I applaud them for their openness and it also gives us the possibility to do a quick calculation. They say that they’ve given out 463 000 RON (~109 905 EUR / 153 499 USD) to 8690 sites.

Does it sound like a lot? Yes. Is it a lot for each individual site? Unlikely. Let's do some quick math: assuming that each site gets the same share (a very simplistic assumption), we have 109 905 EUR / 8690 sites = ~13 EUR per site per year (these are yearly figures for 2010), so around 1 EUR (!) per site per month (!).
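If you want to redo the division yourself, here is a minimal sketch in Python. The totals come straight from the figures quoted above; the function name `average_payout` is just something I made up for illustration.

```python
# Figures quoted in the post: total payout for 2010 and number of sites.
TOTAL_EUR = 109_905
SITES = 8_690


def average_payout(total, sites, months=12):
    """Return (per-site yearly, per-site monthly) payout, assuming equal shares."""
    per_year = total / sites
    return per_year, per_year / months


per_year, per_month = average_payout(TOTAL_EUR, SITES)
print(f"{per_year:.2f} EUR per site per year")    # 12.65 EUR per site per year
print(f"{per_month:.2f} EUR per site per month")  # 1.05 EUR per site per month
```

The equal-share assumption is of course the weakest part, but it gives the order of magnitude.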

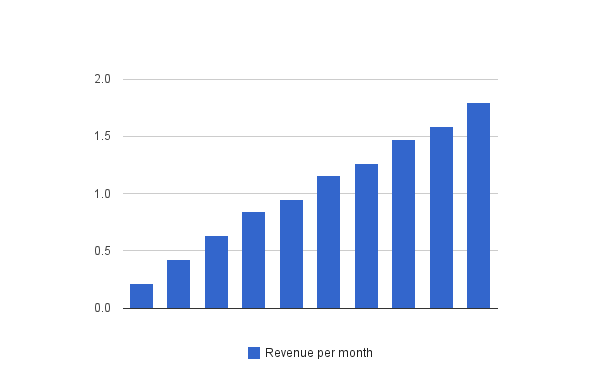

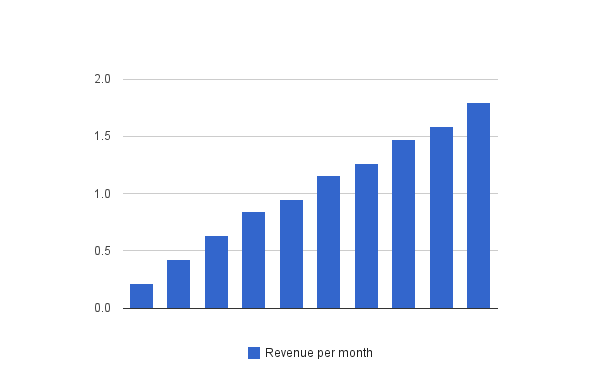

OK, so let's be more realistic. You have a big fanbase, so you should be among the top sites by revenue. Let's consider a binomial distribution of the sites and do a little chart with Google Docs:

What you see here is the revenue per month for a site in a certain category (categories run from 1 to 10, 1 being the lowest-traffic one and 10 the highest-traffic one). The numbers are in EUR. The conclusion: this business model is a very poor revenue source for the individuals participating, but probably a very good marketing avenue for companies (I assume that the cost for companies is around the same as doing an ad campaign, but the returns must be much better, not to mention the Google juice they must be getting from these referrals!).
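For those who prefer code to spreadsheets, here is a rough sketch of this kind of per-category estimate. It is not a copy of my sheet, and it rests on two loud assumptions: the sites are spread over the 10 categories following a Binomial(9, 0.5) distribution, and a site in category c earns c times what a category-1 site earns. The names `binomial_shares` and `monthly_revenue_by_category` are made up for this example.

```python
from math import comb

TOTAL_EUR = 109_905   # yearly payout quoted in the post
SITES = 8_690         # number of sites quoted in the post
CATEGORIES = 10       # categories 1 (lowest traffic) to 10 (highest traffic)


def binomial_shares(n_categories, p=0.5):
    """Fraction of sites in each category, using Binomial(n_categories - 1, p)."""
    n = n_categories - 1
    return [comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(n_categories)]


def monthly_revenue_by_category(total_eur, sites, n_categories=CATEGORIES, p=0.5):
    """Return a list of (category, sites_in_category, eur_per_site_per_month).

    ASSUMPTION (not from the post): a site in category c earns c times
    what a site in category 1 earns.
    """
    shares = binomial_shares(n_categories, p)
    site_counts = [sites * share for share in shares]
    # Yearly earnings of a category-1 site, scaled so all payouts sum to total_eur.
    unit = total_eur / sum(count * (k + 1) for k, count in enumerate(site_counts))
    return [(k + 1, count, unit * (k + 1) / 12) for k, count in enumerate(site_counts)]


rows = monthly_revenue_by_category(TOTAL_EUR, SITES)
for category, count, eur_per_month in rows:
    print(f"category {category:2d}: ~{count:6.0f} sites, {eur_per_month:.2f} EUR per site per month")
```

The exact figures depend entirely on the assumed weighting, so treat the output as an illustration of the method rather than a reproduction of the chart. Changing `p` or the per-category weight is a one-line edit if you want to try a different split.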

PS: in the name of transparency, you can see the sheet I used for the calculation here.